B+Trees are one the most common structures in the database world, especially in a context of indexing. They map well onto a page/block model used for persisting data on the hard drives and provide a nice "jack of all trades" route between capabilities (eg. sorting, range scans) and performance of HDD/SSD-aware reads and writes.

That being said, B+Tree construction assumes that indexed data can be easily composed in a single, sequential order. That's not always possible, as some types (eg. map coordinates) doesn't offer a single order, that could be used to efficiently perform range scans (eg. all points within given area) over indexes constructed using B+Trees.

To address the issue above, a new data structures - known as R(ange)-Trees - have been proposed. In this blog post we'll discuss how do they work and how to implement them.

How tree is organized

From top-level overview R-Tree structure is not much different from a traditional balanced tree, which is foundation of some of the most popular databases that are currently in use. In fact often R-Tree is implemented on top of B+Tree storage layer (eg. Postgres). For those of you, who are not familiar with either, let's roughly discuss similarities first:

- Our tree is a hierarchical structure, which consists of nodes which can be either leaves (stored at level 0) or branches (stored at higher levels).

- Values are always stored in leaves, never in branches.

- Nodes have a maximum capacity of children nodes. Once this capacity is reached and a new child has to be inserted, its parent branch needs to be split first in two and new child is added to one of the halves created this way.

- Branches don't store values, but they can store keys, which are used to navigate which child nodes we're looking for further down the tree structure.

Now there are few things specific for R-Trees:

- R-Tree allows keys stored in branches to have a different structure than actual keys called for insertion. We'll discuss that in detail soon.

- While B-Trees guarantee that values stored inside are maintaining a total order, it's not that easy here. Reason is that R-Tree are used to store multi-dimensional data, which are not easily representable in linear order.

- For the reason above finding which child nodes to go to during scans requires checking out all of the children within particular node before descending down. For this reason performance of R-Tree may be worse than that of B+Tree.

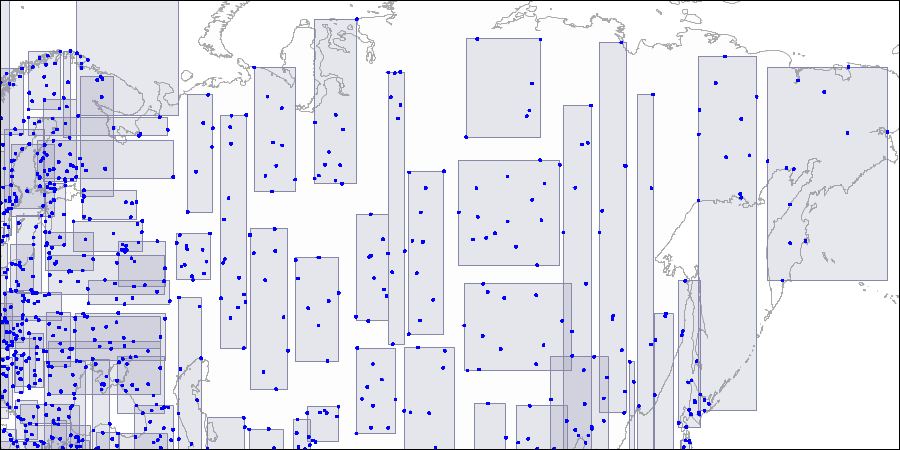

The whole idea here is that each branch key in a tree is defined as a minimal bounding space (or set, or range), that contains all of its children keys. This works even if leaves and branches store data of different type eg. leaves can store geo-location data (points/coordinates), then branch keys are represented by rectangular areas bounding these points. This idea works recursively - higher-level branch keys are rectangles bounding rectangles of lower-level ones:

Here, we'll make a simple R-Tree implementation. We'll only cover 2-dimensional space, but keep in mind it's possible to extend R-Tree to cover any number of dimensions: if you're interested you can take a look at swim-rust R-Tree implementation, which provides a generic support for 2D and 3D spaces over a single codebase, and provide higher-dimension generalization from there.

We'll need to provide some foundational data structures. For our purposes we'll need just idea of point and rectangle.

type Point = { x: float; y: float }

type Rect =

{ low: Point // lower-left point

high: Point } // higher-right point

type Spatial =

abstract Boundary: Rect

We also defined a dedicated Spatial interface - this way we will be able to store any data type, as long as we can wrap it with minimum bounding rectangle. For a single point elements, we can imagine rectangles with lower-left and higher-right points being equal to each other.

Now it's time to define our tree structure. Our R-Tree consists of nodes which we'll be able to divide into leaves (containing key-value entries) and branches containing many children - be it leaves or other branches. We'll also mark each node with a number informing how high in the tree hierarchy it lives: leaves are always at level 0, while tree root is always at the highest level.

type Node<'k,'v when 'k :> Spatial> =

{ level: int // node-depth level - 0 for leaf nodes

entries: Entry<'k,'v>[] } // child entries

and Entry<'k,'v when 'k :> Spatial> =

| Leaf of key:'k * value:'v

| Branch of key:Rect * Node<'k,'v>

type RTree<'k,'v when 'k :> Spatial> =

{ root: Node<'k,'v>

config: Config }

Keep in mind, that while only leaf nodes contain the values, keys are present in both leaves and branches. However, branch keys (for our 2 dimensional space represented by rectangles) are describing so called minimum bounding rectangle which is sufficient to cover all of the children keys stored within that parent.

In this blog post we'll cover 3 most crucial operations, that R-Tree should expose: search for elements in the area, insert/update and remove. We'll start with search.

Range scan

The most important operation in R-Tree arsenal is the ability to search for all inserted elements, which keys can be found within given area. Here we assume that our area is determined by a rectangular space, but in practice you could abstract this behaviour over any data type that defines two operations:

contains a bwhich informs if shapebfully belongs to an area determined by shapea. This is also true ifaequalsb.insertects a bwhich informs that at least one of the points of shapebcan be found within shapea.

While we're used to think about these in terms of shapes of 2D or 3D space, this behaviour could be generalized - we'll discuss that in summary.

Our search function will recursively descend from root node downwards, adding all leaf entries which match contains criteria into the result set. In order to not search through all of nodes in a tree, we simply skip the nodes that neither contains nor intersects with the area we're looking for.

module Node

let search (area: Rect) (n: Node<'k,'v>) =

let rec loop (result: ResizeArray<'v>) (area: Rect) (n: Node<'k,'v>) =

for e in n.entries do

match e with

| Leaf(key, value) when Rect.contains area key.Boundary ->

// leaf key fits into `area`, add it to result set

result.Add value

| Branch(r, child) when Rect.contains area r || Rect.intersects area r ->

// there are children within this branch, that fit into `area`

loop result area child

| _ -> () // there are no shared points, skip that node

let found = ResizeArray()

loop found area n

found.ToArray()

Element insertion

Since we're already passed over search - which was fairly simple - now we're jump into deep waters and discuss insert/update algorithm. The reason behind its complexity lies in node splitting semantics. It's mandatory, as we don't want our node's children to grow up without bounds. Since R-Tree searching requires not only recursively descending through nodes and their children, but also to perform a check over every child of a picked node, whereas B+Tree algorithm only checks children until a first child key doesn't meet the criteria of our search query.

Here we're using a configurable values of a maximum number of children each node can hold, kept inside of config.maxCap property. First let's cover adding a new Leaf item assuming that we found a branch fit to become it's parent:

module Node

let private addLeaf config item node =

let rect = Entry.boundary item

// try find entry matching added item's key

let idx =

node.entries

|> Array.tryFindIndex (function Leaf(k, _) -> k.Boundary = rect | _ -> false)

match idx with

| None ->

// item with that key didn't exist in R-Tree,

// push it at the end of children list

let entries = Array.insertAt node.entries.Length item node.entries

let node = { node with entries = entries }

if n.entries.Length > config.maxCap then

// we surpassed capacity of node, we need to split it

let struct(l, r) = split config node

Split(l,r)

else

NoSplit node

| Some idx ->

// we're updating an existing entry

let node = { node with entries = Array.replace idx item n.entries }

NoSplit node

Here, we're using a Split/NoSplit result value to inform, if we had to split existing node in order for it to accommodate inserted leaf without violating max children capacity. Details on how it happens are hidden behind split function called above. We'll cover it later.

We covered how to add a leaf item to a branch node, but the question remains: given a list of children, how do we determine which one will be a correct choice to insert a leaf into? Again R-Tree key order is not total, therefore it's possible to have several candidates to pick from.

The rule we use here is as follows: the best candidate is branch, which minimum bounding rectangle will expand by the smallest factor after inserting a new entry. That's because we want to keep these minimum bounding rectangle... well, minimal. The smaller they remain, the less chance they will overlap with others at the same level and more accurate they are. We can easily calculate that factor by taking the difference between surface areas of a current branch bounding rectangle and rectangle which would encapsulate inserted element.

module Node

let private addBranch item n =

let mutable minEntry = n.entries.[0]

let mutable minEntryIdx = 0

let itemBoundary = (Entry.boundary item)

let mutable minRect = Rect.merge (Entry.boundary minEntry) itemBoundary

let mutable minDiff = Rect.area minRect - Rect.area (Entry.boundary minEntry)

for i in 1..(n.entries.Length-1) do

let candidate = n.entries.[i]

let bound = Entry.boundary candidate

let expanded = Rect.merge (Entry.boundary item) bound

// calculate the difference of boundary area increase in case if current

// candidate would be picked to host an item

let diff = Rect.area expanded - Rect.area bound

if diff < minDiff then

// area difference is smaller than the last best candidate

minDiff <- diff

minRect <- expanded

minEntry <- candidate

minEntryIdx <- i

let (Branch(_, child)) = minEntry

(child, minRect, minEntryIdx)

With two functions defined above, we need to combine them together two work in generic and recursive manner:

module Node

let rec add (config: Config) level (item: Entry<'k,'v>) (n: Node<'k,'v>) : Split<'k,'v> =

match item with

| Branch _ when level = n.level ->

let n = { n with entries = Array.insertAt n.entries.Length item n.entries }

if n.entries.Length > config.maxCap then

// we surpassed capacity of node, we need to split it

let struct(l, r) = split config n

Split(l,r)

else

NoSplit n

| _ ->

if isLeaf n then

// add leaf node

addLeaf config item n

else

// descent recursivelly

let (child, minRect, minEntryIdx) = addBranch item n

match add config level item child with

| Split(first, second) ->

// since our R-Tree is immutable we need produce a new copy of entries

// array with updated child being removed

let entries = Array.removeAt minEntryIdx n.entries

let n = { n with entries = Array.append entries [|first;second|] }

if n.entries.Length > config.maxCap then

// because of the split from children, we surpassed children capacity

// of a current node, we need to split it and propagate result to a parent

let struct(l, r) = split config n

Split(l,r)

else

NoSplit n

| NoSplit node ->

let e = Branch(minRect, node)

let n = { n with entries = Array.replace minEntryIdx e n.entries }

NoSplit n

The most of this function complexity comes from the need of updating list of nodes children recursively, which may require splitting blocks, which itself eventually may also lead to recursive splitting of parent nodes as well.

Splitting the node

So far we were skipping details of node splitting algorithm. Now it's the time to cover it. As we already witnessed, if adding new child node resulted in moving over allowed number of children, we split parent in two halves, each one containing a (fair) subset of children of original parent.

Again, the major challenge is lack of linear order, that we could use to simply set a split point within our list of children. We'll discuss two splitting strategies: linear and quadratic. They both share common characteristic: *we start by defining two groups with "seed" keys taken from original parent children, and then incrementally drain children one by one and assign them to the group that's "closer", until all children are drained.

We could ask ourselves: this is pretty straightforward, so what's the difference between strategies we use? It all boils down to deciding on two things:

- How to pick two seed nodes.

- Determine what "closer" means.

We'll cover both of these later on. For now let's present a basic skeleton of our splitting function:

module Node

/// Each split group consists of children entries and info about

/// area that covers all of them

type Group<'k,'v when 'k :> Spatial> = (Entry<'k,'v>[] * Rect)

let split (config: Config) (n: Node<'k,'v>) =

let split config (entries: Entry<'k,'v>[]) : (Group<'k,'v> * Group<'k,'v>) =

// pick indexes of seed nodes

let (i1, i2) =

match config.strategy with

| SplitStrategy.Linear -> SplitStrategy.linear entries

| SplitStrategy.Quadratic -> SplitStrategy.quadratic entries

let entries = ResizeArray(entries)

// pick seed nodes

// `i2` is always greater than `i1`

let seed2 = entries.[i2]

let seed1 = entries.[i1]

entries.RemoveAt i2

entries.RemoveAt i1

// first split group

let mutable bound1 = Entry.boundary seed1

let group1 = ResizeArray<_>(config.minCap)

group1.Add seed1

// second split group

let mutable bound2 = Entry.boundary seed2

let group2 = ResizeArray<_>(config.minCap)

group2.Add seed2

while entries.Count > 0 do

//TODO: drain entries into groups

// once all entries have been drained let's return newly

// created split groups

let first: Group<'k,'v> = (group1.ToArray(), bound1)

let second: Group<'k,'v> = (group2.ToArray(), bound2)

(first, second)

// split node entries into two new branch nodes

let ((group1, bound1), (group2, bound2)) = split config n.entries

let first = Entry.Branch(bound1, { level = n.level; entries = group1 })

let second = Entry.Branch(bound2, { level = n.level; entries = group2 })

(first, second)

The code above is basically responsible for creating split groups, initializing them with seed nodes and then composing the results once everything is done. We deliberately have left the body of entry drain loop empty, so we can talk about it now.

// drain entries into groups

while entries.Count > 0 do

// if we have just enough remaining entries to fit

// minimun capacity of any group, there's no need to pick

// just push all entries into that group

if entries.Count + group1.Count = config.minCap then

for e in entries do

bound1 <- Rect.merge bound1 (Entry.boundary e)

group1.Add e

entries.Clear ()

elif entries.Count + group2.Count = config.minCap then

for e in entries do

bound2 <- Rect.merge bound2 (Entry.boundary e)

group2.Add e

entries.Clear ()

else

// if we still have plenty of entries, use algorithm to pick a group

let (idx, expanded, group) =

match config.strategy with

| SplitStrategy.Linear -> SplitStrategy.nextLinear entries bound1

| SplitStrategy.Quadratic -> SplitStrategy.nextQuadratic entries bound1 bound2 group1.Count group2.Count

// pick entry choosen by split strategy and assign it to its new group

let e = entries.[idx]

entries.RemoveAt idx

if group = 0 then

bound1 <- expanded

group1.Add e

else

bound2 <- expanded

group2.Add e

Let's start from the else block: here we use our strategy-specific algorithm, which returns a tripple of:

- Index of entry that has been picked.

- New rectangular boundary of that entry, expanded by the key of picked entry.

- Number of a group, picked entry should be inserted into.

Another piece of this loop are remaining if statements. They come from one of the requirements of B+Tree/R-Tree: when splitting node in two, we want to assign children fairly, meaning each of the split groups should have at least a configured minimum (config.minCap) number of children - which typically is a half of maximum capacity, but it's not the strict rule. Without it our algorithm could run wild and split children into eg. 90% / 10% of each group capacity, which would result in unbalanced tree.

Linear split

Now it's the time to discuss different splitting heuristics. We'll start with linear splits.

The core idea behind linear split is: find two seed nodes from elements that are the most far away from each other, then just assign next entry to one of the nodes at random. In order to calculate that distance, we'll define a supporter structure, that we'll use to calculate the min/max values of boundaries (each rectangular boundary is determined by its lower-left and higher-right points) for every single dimension. Since we're operating in 2D space, we're going to use two of such structures.

/// Struct storing statistics for a single dimension

[<Struct;IsByRefLike>]

type private DimStats =

{ mutable minLow: float // minimum value of left-lower point dimension

mutable maxHigh: float // maximum value of righ-higher point dimension

mutable maxLow: float // maximum value of left-lower point dimension

mutable lowIndex: int // index of an entry having value above

mutable minHigh: float // minimum value of left-lower point dimension

mutable highIndex: int } // index of an entry having value above

[<RequireQualifiedAccess>]

module private DimStats =

let create () =

{ minLow = Double.MaxValue

maxHigh = Double.MinValue

maxLow = Double.MinValue

minHigh = Double.MaxValue

lowIndex = 0

highIndex = 0 }

let inline farest (s: DimStats inref) = Math.Abs(s.maxHigh - s.minLow)

let inline nearest (s: DimStats inref) = Math.Abs(s.minHigh - s.maxLow)

Keep in mind, that this approach doesn't limit us to 2D space - 3D shapes that could be written into a cubic shape, which also needs only two points to keep a proper shape definition - the only difference is that these points use 3 coordinates (x,y,z) instead of two (x,y). We can potentially abstract this to work with any number of dimensions.

Updating our dimension statistics structure is fairly simple:

/// Update stats based on dimension of lower-left (`lo`) and higher-right (`hi`)

/// corners of a boundary at given index `i`.

let inline computeDim (s: DimStats byref) (lo: float) (hi: float) (i: int) =

s.minLow <- Math.Min(s.minLow, lo) // update minimum lower-left seen so far

s.maxHigh <- Math.Max(s.maxHigh, hi) // update maximum higher-right seen so far

if lo > s.maxLow then

// if we found new maximum lower-left, cache index of its entry

s.maxLow <- lo

s.lowIndex <- i

if hi < s.minHigh then

// if we found new minimum lower-left, cache index of its entry

s.minHigh <- hi

s.highIndex <- i

Our linear seed pick relies on calculating nearest and farest distance for a given dimension. Then we normalize these values and compare each dimension as follows:

module SplitStrategy

let linear (entries: #IReadOnlyList<Entry<'k,'v>>) =

let mutable x = 0 // index of first seed node

let mutable y = 1 // index of second seed node

let mutable dx = DimStats.create () // Entry statistics on `x` dimension

let mutable dy = DimStats.create () // Entry statistics on `y` dimension

// compute statistics of all entries for each dimension

if entries.Count > 2 then

for i in 0..(entries.Count-1) do

let e = entries.[i]

let rect = Entry.boundary e

computeDim &dx rect.low.x rect.high.x i

computeDim &dy rect.low.y rect.high.y i

// compute normalized ratio between farest and nearest distance

// for each dimension

let farX, farY = DimStats.farest &dx, DimStats.farest &dy

let nearX, nearY = DimStats.nearest &dx, DimStats.nearest &dy

let normX, normY = (nearX / farX), (nearY / farY)

let lowIdx, highIdx = if normX > normY then dx.lowIndex, dx.highIndex else dy.lowIndex, dy.highIndex

x <- lowIdx

y <- highIdx

let cmp = x.CompareTo y

if cmp < 0 then (x, y)

elif cmp > 0 then (y, x)

elif x = 0 then (0, 1)

else (0, y)

You may notice that our algorithm for picking the farest boundaries may not be perfect - the winner seed nodes are based by the biggest normalized distance as understood by a single normalized dimension. However this is ok. We don't need an ideal response, but one that's fairly acceptable while being fast to compute.

In terms of assigning entries to each split groups, we simply assign them at random. In linear approach we prioritize speed over accuracy.

module SplitStrategy

let inline nextLinear (entries: #IReadOnlyList<Entry<'k,'v'>>) boundary =

let rect = Rect.merge boundary (Entry.boundary entries.[0])

(0, rect, 0)

If you're confused with code above, let me explain: entries represent children which don't have established key-order. As this function works in context of split function defined before, we just always assign the next entry to a first group. Once the number of remaining entries will go low enough to fill up the second group up to reach minimum expected node capacity, the split function itself will assign them to the other split group.

While linear split is not perfect in terms of boundary assignment - because of randomized split group construction - the simplicity of the nextLinear function makes it very fast to assign new items, making it a good strategy whenever a churn of added/removed entries is high.

Quadric split

Quadratic split strategy is oriented around the idea of shape area size - you can think of shape area as an equivalent of distance between two points. We assign the node to a group that would expand the minimum bounding rectangle of elements in that group by a smallest factor (which means, they have the smallest distance from seed). On the other side we pick seed nodes, which minimum bounding rectangle area is the highest (which is equivalent of them being the most "far away" from each other). How does it work?

We given multidimensional space can assume that the bigger area of bounding rectangle between two elements, the further away they are from each other. On the other side the seeds themselves should have as small area as possible - otherwise we could just peek a seed node with enormous size (eg. equal to entire search space), which would bias second part of our algorithm and cause all entries to be assigned to that seed's group (as if wouldn't cause it's area to increase, making it the most optimal).

module SplitStrategy

let quadratic (entries: #IReadOnlyList<Entry<'k,'v>>) =

let mutable x = 0

let mutable y = 1

let mutable maxDiff = Double.MinValue

if entries.Count > 2 then

for i in 0..(entries.Count-1) do

for j in 1..(entries.Count-1) do

let first = Entry.boundary entries.[i]

let second = Entry.boundary entries.[j]

let combined = Rect.merge first second

// calculate the difference between area of minimum bounding rectangle

// of two elements the the area of the elements themselves

let diff = (Rect.area combined) - (Rect.area first) - (Rect.area second)

if diff > maxDiff then

// the winner is the biggest area difference

maxDiff <- diff

x <- i

y <- j

(x, y)

The second function is responsible for picking next entry from a list of children and assigning it to one of two groups of choice. Unlike linear approach, picking a correct group for every entry in quadratic approach depends on computing how much each of the groups boundaries (reminder: we use them as keys for Branch nodes) and picking the one that would expand its boundary the most.

First: how to we calculate the expansion ratio of entry being added to a group boundary? It's simply a difference between the area of that minimum bounding rectangle with and without an entry included in a specific group.

module SplitStrategy

let private preference (e: Entry<'k,'v>) rect1 rect2 =

let bound = Entry.boundary e // boundary of current entry

// compute min bounding rectangles that would be required after adding

// an entry to each of the groups

let wrap1 = Rect.merge rect1 bound

let wrap2 = Rect.merge rect2 bound

// compute how much each of the groups boundaries would grow by adding

// the current entry

let diff1 = Rect.area wrap1 - Rect.area rect1

let diff2 = Rect.area wrap2 - Rect.area rect2

(diff1, wrap1, diff2, wrap2)

With that set, let's now implement a decider function:

module SplitStrategy

let private selectGroup rect1 rect2 len1 len2 diff1 diff2 =

// first pick by minimal bounding area expansion...

if diff1 < diff2 then 0

elif diff2 < diff1 then 1

// ...otherwise pick the group with smaller area...

elif Rect.area rect1 < Rect.area rect2 then 0

elif Rect.area rect2 < Rect.area rect1 then 1

// ...lastly pick the group which has smaller amount of entries assigned

elif len1 < len2 then 0

elif len2 < len1 then 1

else 0

As you can see we have actually three criteria used to pick a group - while our area expansion parameter we computed earlier will probably catch 9/10 cases, in cases when it's equal for both groups we then pick group with the smallest area, and in case of equal area sized, by the group length.

Keep in mind: we don't need to really worry about groups being of unbalanced size. Our split function uses group resolution algorithm only until a minimum required capacity is reached. Here we also pick entries that are the most optimal - adding them will result in the least group bounding area expansion - first:

module SplitStrategy

let inline nextQuadratic (entries: #IReadOnlyList<Entry<'k,'v>>) rect1 rect2 len1 len2 =

let mutable idx = 0

let mutable e = entries.[idx]

let (pref1, exp1, pref2, exp2) = preference e rect1 rect2

let mutable maxPreferenceDiff = Math.Abs(pref1 - pref2)

let mutable group = selectGroup rect1 rect2 len1 len2 pref1 pref2

let mutable expanded = if group = 0 then exp1 else exp2

for i in 1..(entries.Count - 1) do

let e = entries.[i]

let struct(pref1, exp1, pref2, exp2) = preference e rect1 rect2

let preferenceDiff = Math.Abs(pref1 - pref2)

// pick the entry that causes the smallest min bounding rectangle expansion

if maxPreferenceDiff <= preferenceDiff then

maxPreferenceDiff <- preferenceDiff

idx <- i

group <- selectGroup rect1 rect2 len1 len2 pref1 pref2

expanded <- if group = 0 then exp1 else exp2

(idx, expanded, group)

While quadratic split provides better quality than its linear equivalent, it's more expensive to produce. General heuristic is that, it's a good pick in cases when R-Tree is fairly static (entries are rarely added), as node splitting algorithm is much more expensive than randomized approach used by linear split.

Element removal

When we're talking about removing items from R-Tree by key, we could consider two cases:

- Remove entry, which key is equal to a given argument.

- Remove all entries with keys, that exists within the boundaries of a given argument.

Here we're only consider a first one. In order to keep our R-Tree balanced, we need to consider a situation, where removing an item may cause node children count to drop below a minimum allowed amount (canonically it's a half of a maximum allowed amount, but it's not a strict rule). If it happens, we need to consider a necessity of merging it with another node.

First let's start from removing entries at a leaf node level.

module Node

let rec remove (config: Config) rect (n: Node<'k,'v>) =

if isLeaf n then

// handle insertion at leaf

// check if current leaf node contains expected entry

let idx =

n.entries

|> Array.findIndex (function Leaf(k,_) -> rect = k.Boundary | _ -> false)

if idx = -1 then

(n, None, [||]) // entry not found in a current leaf node

else

// remove entry from the leaf node

let removed = n.entries.[idx]

let n = { n with entries = Array.removeAt idx n.entries }

(n, Some removed, [||])

else

//TODO: handle insertion at branch

Our Node.remove function returns an altered node, a removed entry (if any) and a list of so-called "orphans" - nodes which due to removal went under a minimum allowed capacity of their parent. We'll need to eventually reinsert them at the end of removal process.

Ok, what about removal at the branch level? Well, there are few things to remember:

- If a minimum bounding rectangle that's a branch key says it contains a key we want to remove, that's just a suggestion not a promise. The item we want to remove in fact may not be there, so we may need to change other nodes. That's also why one of our recursive function parameters is a removed item - so we know if it was actually found.

- If we removed an entry, it may turn out, that we need to recompute minimum bounding rectangle of our branch. However this doesn't happen always, only when the coordinates or removed element are adjacent to edges of a minimum bounding rectangle itself.

With all of these challenges to consider, let's see how our branch removal could look like:

let rec remove (config: Config) rect (n: Node<'k,'v>) =

if isLeaf n then

//TODO: handle insertion at leaf

else

// handle insertion at branch

let mutable entryIdx = -1

let mutable removedOption = None

let mutable entries = Array.copy n.entries

// we're at the branch node, check which child nodes could

// potentially contain item we're looking for

let mutable i = 0

while i < entries.Length do

let e = entries.[i]

let r = Entry.boundary e

if Rect.contains r rect then

// we found a potential matching branch

let (Branch(r, child)) = e

// try to remove an item from that child

let (child, removed, orphans) = remove config rect child

match removed with

| None -> () // item not found

| Some key ->

removedOption <- Some((removed, orphans))

let removedRect: Rect = Entry.boundary key

let r =

// check if removed item's key was adjacent to the

// edges of a minimum bounding rectangle of its

// parent...

if Point.anyMatch removedRect.low r.low ||

Point.anyMatch removedRect.high r.high then

// ...if so, we need to recalculate minimum bounding

// rectangle, as it may have shrunk

child.entries

|> Array.map Entry.boundary

|> Array.reduce Rect.merge

else r

entries.[i] <- Branch(r, child)

if child.entries.Length < config.minCap then

// if removed item caused parent to have less than

// allowed minimum of entries, we'll need to merge it

entryIdx <- i

i <- entries.Length // break;

i <- i + 1

//TODO: remove element from current branch

We covered finding out the entry of a node that may need removal, but the remove itself was not called yet:

module Node

let rec remove (config: Config) rect (n: Node<'k,'v>) =

if isLeaf n then

//TODO: handle insertion at leaf

else

//TODO: index of candidate having a key to remove ...

// remove element from current branch

let n = { n with entries = entries }

match removedOption with

| None -> (n, None, None)

| Some(removed, orphans) when entryIdx < 0 ->

(n, removed, orphans)

| Some(removed, orphans) ->

// after removing the item, parent capacity gone under minimum

// allowed number of children. That parent gets orphaned, and

// will have to be reinserted later on

let orphan = n.entries.[entryIdx]

let n = { n with entries = Array.removeAt entryIdx n.entries }

let orphans = Array.insertAt orphans.Length orphan orphans

(n, removed, orphans)

With our Node.remove function done, we're now ready to wrap it up into something that could be facing user's API:

module RTree

let remove (key: 'k) (tree: RTree<'k,'v>) =

let rect = key.Boundary

let (root, removed, orphans) = Node.remove tree.config rect tree.root

let mutable root =

if root.entries.Length = 1 && not (Node.isLeaf root) then

match root.entries.[0] with

| Branch(_, n) -> n

| _ -> root

else root

// reinsert orphaned nodes

for orphan in orphans do

match orphan with

| Leaf _ ->

root <- insert tree.config 0 orphan root

| Branch(_, n) ->

for e in n.entries do

root <- insert tree.config n.level e root

{ tree with root = root }

A full source for a code snippets above can be found here.

What's next?

Here, we only talked about using R-Tree in context of 2D rectangular spaces. However they can be used in much wider context eg. it's fairly easy to extend our DimStats used in linear split from 2 to any number of dimensions. If we could abstract concepts like contains, intersects, equals, area or merge we could extend our R-Tree to work over any kind of shapes. But that's not everything! If we extend concept of minimum bounding rectangle into a minimum bounding set (data set which compactly describes all nested keys), we can index much wider variety of structures.

In this blog you could often hit on a concept of Vector Clocks - data structures used to timestamp and tracking the concurrency of operations. The main difference is a mindset shift:

- If we can extend 2D space into N-dimensional one we have tools to represent vector clocks. While in 2- or 3D spaces, dimensions were mapped to

x,y(andz) point coordinates, they could be represented as indexes in an array or... replica identifiers of vector timestamps. - Shape-like operations also have their equivalent in vector clocks partial comparison space:

containsandequalsas>=and==intersectsas "is concurrent to" comparisonmergeis just an equivalent of clock merging we discussed in the past

Moreover, with minimum bounding sets we could also index data that's not numeric in nature. As an example let's use PostgreSQL GiST index (which uses this exact approach) for text data. In this case we represent leaf keys as set of words (terms) used within indexed text document, while branch keys are represented as (by default 64bit long) bloom filters:

- For leaf keys,

contains a b/equals a b/intersects a b/merge a boperators are basically the same as traditional Set operators executed over set of words (isSuperSet a b/setEquals a b/nonEmpty(intersect a b)/union a b). - For branch keys,

contains a b/equals a b/intersects a b/merge a boperators could be mapped onto bit mask operations like(a ||| b = a)/(a = b)/(a &&& b <> 0)/(a ||| b).

Usually this approach enables us to create indexes that oftentimes are faster to update (but slower to read) than inverse document indexes used traditionally in full text search solutions.

All of the operations defined above are possible thanks to the fact that - unlike B+Trees - R-Trees don't need to operate on exact linear order. What's missing for the full picture here is definition of split algorithm, as we need a way to represent and calculate the expansion of a minimum bounding set, and that is not always easy to represent in generic manner. For a reference on how to approach it, you could check out the implementation of a split algorithm for text data in Postgres.

Comments